Psycholinguistics/What is a Word?

Words are one of the most complex and powerful resources we as humans have at our disposal. As Geoffrey Hughes remarks, "people who are normally shrewd will be persuaded by banal advertising copy; those who are normally politically inert or pacifist can be mobilized to die for a slogan" (1988). It is only natural that we should be curious about the words we use, but the system behind them is not simple.

Introduction

The problem with asking “What is a Word?” is that there is no single answer. “Word” is not a technical term, and it can mean a number of things. In typing, a word is the string of characters between spaces. But in a polysynthetic language such as Yupik, a single word by that description can carry the meaning of a whole sentence. Is it still a word? Some linguists count individual morphemes (including bound morphemes) as words, on the grounds that each has meaning on its own and signals a word category. This is a minority view: most linguists would treat even a sentence’s worth of meaning as a single word in a polysynthetic language. Still, there is ample room for disagreement even between languages as similar as English and German. The German word “Einfamilienhaus” means “single-family house” in English. It is a compound formed from three parts: how many words is it, and is the number different in English, even though the components have the same meanings and appear in the same order?

Even within a single language, the answer is not clear. Take the sentence “I walk to the place where he walks; soon we will be walking together.” Three versions of the verb “walk” appear: “walk,” “walks” and “walking.” Are they three different words or variations of one word?

The answer has a large effect on estimates of the number of words in a language, or of the number of words a single person knows. Oldfield (in Lively et al., 1994) suggested that the average adult probably knows around 75,000 words. Crystal (2006) points out the difference between active and passive vocabulary: active vocabulary is the set of words a person uses comfortably, and passive vocabulary is the set of words a person knows well enough to understand but would not use in his or her own speech or writing. For example, virtually all speakers of English would readily use the word “cat,” but fewer would use “verisimilitude” with such ease (even if they understand its meaning).

This chapter aims to give a detailed discussion of words. It will talk about words themselves (their composition and classification as signs), the meaning of a word, relationships between words, ways of classifying words, the frequency of certain words, and changes in the lexicon that happen over time.

What Are Words Made Of?

At their most elemental level, words are made up of sound units called phonemes. A phoneme is the smallest contrastive unit of language, i.e. the smallest unit that can make a difference to meaning. The words “bat” and “rat” differ by only one phoneme, yet their meanings are distinct. Phonemes have no inherent meaning of their own: they mean something only when combined with other phonemes into longer strings. Even then, the meaning does not lie in the sounds themselves. Brown (1958) gives the example of the English suffix “-er,” which can mark either the agent of an action (e.g. “driver”) or the comparative form of an adjective (e.g. “nicer”). Clearly, there is nothing about the sounds in “-er” that has independent meaning. The next-largest unit we can divide words into is the syllable: “bat” has one syllable, “battle” has two and “battlement” has three.

Some syllables (or groups of syllables) have meaning on their own and can be attached to or removed from a word (called a base) in the process of affixation. This is the domain of morphology: the study of morphemes, which are units of meaning (usually) smaller than a word that combine to form larger words. Morphemes can be classified along two dimensions. The first is bound vs. free. Free morphemes can exist on their own as words and have meaning; an example would be “nation.” Bound morphemes cannot function alone in a sentence and must attach to a free morpheme (or base), changing its meaning and often its syntactic category (see the section on word classification). English has many of these. One is -s, which combines with verbs to give the third person singular present form (e.g. “walk” becomes “walks”). Another is -al, which turns a noun into an adjective (e.g. “nation” becomes “national”). These two examples bring us to the other dimension for classifying morphemes, inflectional vs. derivational (Hudson, 2007). The first example, -s, is an inflectional morpheme: it does not change the meaning of the word but is present for grammatical reasons only. “She walk” obviously means the same as “she walks,” but is not grammatically acceptable. The second example, -al, is a derivational morpheme: its function is not grammatical. Instead, it changes the meaning of the word and often its syntactic category. Here, the noun “nation” becomes the adjective “national,” which means “pertaining to a nation.” All inflectional and derivational affixes in English are bound morphemes, and most of the bases they attach to are free morphemes.

The table below gives an example of concatenative morphology, in which each cell represents a different morpheme. It shows how words can be formed by a process rather than memorized individually: here, the noun “nation” becomes “nationalizes,” the third person singular present form of the verb “nationalize.”

| Base | Derivational Affix | Derivational Affix | Bound Inflectional Affix |
|---|---|---|---|
| Nation | -al (→ adjective) | -ize (→ verb) | -s (→ 3rd person singular present) |

Here the question of what counts as a word appears again. It seems silly to treat each inflectional form of every verb in a language as a separate word (each English verb has either three or four inflectional forms, and many languages have many more). But it also seems silly to treat “nation” and “national” as the same word (not to mention “nationalize,” “nationalization,” etc.). So whether two forms count as the same word depends on whether they are related by inflection or by derivation.

This is where an exact definition becomes possible: while “word” is a somewhat unclear concept, “lexeme” is not. A new lexeme can be created by derivational affixation, which is why “national” is a different lexeme from “nation.” A lexeme has a distinct meaning and can be modified grammatically through inflectional affixation, which is why “nation” and “nations” are the same lexeme and differ only in number. Pinker (1999) introduces the concept of the listeme: an item that must be memorized (as part of the list of words one knows) because its meaning cannot be deduced from its components. Examples are the verb “walk” and the “-s” that is added to make it third person singular. In the table above, each morpheme is also a listeme. The table thus shows how words are made up of separate parts that have independent meanings. These parts are combined into larger units that do not need to be memorized individually.
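The concatenative process in the table can be sketched as a chain of suffix rules. Each rule needs a base of a particular category and produces a new category. This is a minimal Python sketch, not a model taken from Pinker or Hudson. The rule table `AFFIXES` and the helpers `attach` and `derive` are hypothetical. The rules are deliberately simplified: real English -ize, for instance, also attaches to nouns, and spelling adjustments (as in “happy” + “-ness” → “happiness”) are ignored.

```python
# Toy affix rules, invented for illustration: each suffix maps to the category
# of base it attaches to (in this toy grammar) and the category it produces.
AFFIXES = {
    "al": ("noun", "adjective"),                         # derivational
    "ize": ("adjective", "verb"),                        # derivational
    "s": ("verb", "verb, 3rd person singular present"),  # inflectional
}

def attach(word, category, suffix):
    """Concatenate one suffix onto a base, checking the base's category."""
    needs, gives = AFFIXES[suffix]
    if category != needs:
        raise ValueError(f"in this toy grammar, -{suffix} attaches to a {needs}, not a {category}")
    return word + suffix, gives

def derive(base, category, suffixes):
    """Apply suffixes left to right, as in the table, printing each step."""
    word = base
    for suffix in suffixes:
        word, category = attach(word, category, suffix)
        print(f"{word} ({category})")
    return word, category

derive("nation", "noun", ["al", "ize", "s"])
```

Run as written, this prints “national (adjective)”, then “nationalize (verb)”, then “nationalizes (verb, 3rd person singular present)”. The point is that each step is a rule applied to whatever the previous step produced, so “nationalizes” never has to be stored as a unit.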

Words as Arbitrary Signs

The study of words is part of semiotics, the study of signs. Signs are commonly divided into three types: the icon, the index and the symbol. The icon represents a concept by resembling it, and the index points to it. The symbol is the only type whose link to its concept is purely arbitrary. Traffic offers a simple illustration. A slippery-road sign, with an image of a car skidding, is an icon. A crumpled car on the side of the road is an index of a recent accident. A yield sign is a true symbol: there is nothing about the red and white triangle that inherently means “yield,” just as there is nothing in the sounds of the word “dog” that inherently means “a furry quadruped with a tail, etc.” In his lectures on linguistics, Ferdinand de Saussure defined the linguistic sign as being composed of the signifier and the signified. In the case of a dog, the signifier is the word “dog” (or “chien,” or “Hund”), and the signified is the speaker’s and listener’s mental representation of the concept of a furry quadruped with a tail (Saussure, 1974). The arbitrariness of the relationship between words and what they signify is clear from how different these three words for “dog” are. Even onomatopoeic words, which are often thought to be less arbitrary, are largely conventional: dogs in English say both “bow-wow” and “woof,” which sound nothing alike!

The above diagram demonstrates the relationship between signifier and signified. You can see how they are both integral parts of the sign (represented by the oval containing both signifier and signified), and also how this relationship works in both directions. Awareness of one part of the sign entails knowledge of the other part.

Semantics

Semantics is the study of what words mean. A word’s denotation, also called its conceptual meaning (Yule, 2006), is its basic, literal meaning (as in “furry quadruped with a tail” above). This is what you will find in a dictionary. Words often also have connotations, which are more subjective and evaluative aspects of meaning. For example, the difference between “slender” and “skinny” is one of connotation: “slender” has a positive connotation, whereas “skinny” has a negative one. The same could be said of “frugal” and “cheap.” Yule (2006) also identifies associative meanings, which are secondary concepts associated with words. For example, “slender” might have an associative meaning of “pretty,” while “skinny” might have an associative meaning of “unhealthy.” Words with similar meanings also often differ in register, the level of formality of a word. A word like “dude” belongs to an informal register, whereas “sir” belongs to a higher, more formal register.

Meaning is not a simple thing. Some words are very easily linked to a concept; a violin, for instance, is always a violin and not a cello or a dill pickle or racism. But how sure can we be that that word, and only that word, refers to a violin and only a violin? And how sure can we be that all speakers of English conceive of the concept in the same way? Aitchison (2003) contrasts the “fixed meaning assumption” with the idea of “fuzzy edges.” She cites a study by Labov (1973, in Aitchison, 2003), in which people were asked to identify an object as a cup, a vase or a bowl. Their answers varied greatly and shifted depending on what was inside the container. Aitchison takes this as evidence that words do not have fixed meanings shared by all speakers. Instead, they have fuzzy edges that depend on many factors. Look at the two images to the right. The first is designed for drinking tea, even though it has no handle. Is it a cup or a bowl? The second is an ancient bowl from Cyprus. Is it still a bowl, even though it has a handle?

Some words refer to concrete things, that is, things that can be perceived through the senses. Other words refer to abstract things, such as racism, philosophy or love. There is evidence to suggest that abstract words are harder to understand than concrete words. Schwanenflugel (1991) describes three theories of why this is. The first is the dual-coding theory, proposed by Paivio et al. (1971, 1986, in Schwanenflugel, 1991). It posits two parallel systems: the verbal system, made up of logogens, and the image system, made up of imagens. Concrete words are more strongly linked to the image system and therefore activate both systems, whereas abstract words tend to activate only the verbal system. The second is the age of acquisition hypothesis (Gilhooly and Gilhooly, 1979, in Schwanenflugel, 1991). It holds that abstract words are acquired later in language development than concrete words, so speakers have had less cumulative exposure to them, and they take longer to understand. The third is the context availability model (Bransford and McCarrell, 1974; Kieras, 1978; Schwanenflugel and Shoben, 1983, all in Schwanenflugel, 1991). It argues that comprehending a word involves retrieving information from the subject’s knowledge base. Because abstract words have weaker connections to contextual knowledge than concrete words do, this retrieval is slower for them.

Aitchison (2003) also discusses a widely held theory in psycholinguistics: that the mind contains a “prototype” for each word, the example it considers most representative of that concept. So for the word “cat,” most people would think first of a domestic house cat before extending the word to include a tiger or a cheetah. Having a prototype in mind enables a speaker to extend the label to something that shares characteristics of the prototype, even if it is unfamiliar (Brown, 1958). Rosch and Mervis (1975, in Hampton, 1991) propose a “family resemblance score” for each member of a category, which correlates with how typical that member is judged to be compared to the prototype.
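A family resemblance score can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions. It follows the weighting usually attributed to Rosch and Mervis: each attribute is weighted by how many members of the category share it, and a member’s score is the sum of its attributes’ weights. The “cup” category echoes Labov’s containers above, but the attribute lists are invented for illustration, not taken from any experiment. The function name `family_resemblance` is hypothetical.

```python
from collections import Counter

def family_resemblance(category):
    """Score each member of `category` (a dict: member -> set of attributes).

    Each attribute is weighted by the number of members that have it, and a
    member's score is the sum of the weights of its own attributes.
    """
    weights = Counter(attr for attrs in category.values() for attr in attrs)
    return {member: sum(weights[a] for a in attrs) for member, attrs in category.items()}

# Hypothetical attribute lists, invented for illustration only.
cup = {
    "teacup": {"handle", "for drinking", "small", "ceramic", "has saucer"},
    "mug": {"handle", "for drinking", "ceramic", "tall"},
    "paper cup": {"for drinking", "small", "disposable"},
    "handleless tea bowl": {"for drinking", "ceramic", "wide"},
}

scores = family_resemblance(cup)
for member, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{member}: {score}")
```

With these made-up attributes, the teacup scores 12, the mug 10, the handleless tea bowl 8 and the paper cup 7. Members that share many attributes with the rest of the category come out as more prototypical, while the odd cases sit near the fuzzy edge.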

Word Relationships

In their discussion of Saussure, Silverman and Torode (1980) say that “signs have value only by the differences between them.” In other words, words have meaning only in relation to what other words do not mean and to the way they combine with other words. This is a helpful way of looking at language: words may be its building blocks, but they have meaning only for someone familiar with the language more generally. This section focuses on the comparisons and relationships between words.

The cat example in the previous section brings us to an issue with trying to find the meaning of a word: what if a word has more than one meaning? This is a fairly common phenomenon called lexical ambiguity. Take the word “bat.” It can mean either a small flying animal or an item used in sports to hit a ball. This is called homonymy: a single word has two completely separate definitions that share the same phonological and orthographic shape (Yule, 2006). What about the word “head”: it can mean a body part, an important person at a company, one end of a bed, etc. This is an example of polysemy-these words are related in meaning, so they are considered just one word with multiple senses (Yule, 2006). Copestake and Briscoe (1997) identify two types of polysemy: constructional polysemy, in which one sense is contextually specific, and sense extension, in which the two senses are simply related and do not depend on context. The correct meaning can be inferred from the context, but how does this occur? Simpson (1994) outlines three basic models for how the mind deals with ambiguous words. Context-dependent models hold that only contextually relevant meanings are activated by the brain. Context-independent models say that activation of a particular meaning is not based on context but something else, often word frequency. The multiple access model proposes that all meanings are activated and processed, and only after this processing is the appropriate meaning selected. See the chapter on Lexical Access for more on this topic. Murphy and Andrew (1993) found that context influenced which antonym (see below) subjects chose for polysemous words, supporting the context-dependent model.

Two different words that share a meaning are called synonyms (Yule, 2006). Synonyms almost always differ in connotation, register or associative meaning, which leads most linguists to conclude that perfect synonymy does not exist. Perfect synonymy would be inefficient: there is no reason to remember two words with exactly the same usage and meaning. So when this situation arises, one of two things happens. Either one word falls out of use and the other takes over (see layering, below), or one word comes to have a more specialized meaning than the other. In English, this often happens when an Anglo-Saxon word is joined by a Latin borrowing, giving us pairs like “chew” and “masticate.”

Two words that have opposite meanings are called antonyms. Murphy and Andrew explain that "words have opposites only if one dimension of their meaning is particularly salient, and if there is a word that is equivalent except for its value on that dimension" (1993). Antonyms can be gradable, like “big/small” or “happy/sad.” Gradable antonyms lie along a scale and can be used comparatively, and the negative of one does not entail the other (“not big” does not necessarily mean “small”). Antonyms can also be non-gradable, like “dead/alive,” where “not alive” always means “dead.” A third type is the reversive, where one word means the reverse of the other’s action, like “enter/exit” (Yule, 2006). Antonyms provide fertile ground for euphemism, for example saying someone is “not a small person” (i.e. “a big person”).

Another way words relate to each other is through hyponymy. Although the term is not widely known, the relationship is very common. Hyponymy concerns the degree of specificity of a word: a hyponym is more specific than its corresponding hyperonym. So “rose” is a hyponym of “plant,” whereas “plant” is a hyperonym of “rose” and “orchid.” This way of looking at words emphasizes the hierarchical relationships between them (Yule, 2006) and relates to the idea of a prototype for each word. Returning to the cat example above, the following diagram shows the hyponymy relationships between those words (each word is a hyponym of the word below it).

Bengal Tiger

↑

Tiger

↑

Cat

↑

Mammal

↑

Animal
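Hyponymy is hierarchical and transitive: a Bengal tiger is a tiger, so it is also a cat, a mammal and an animal. For that reason, the diagram above can be represented as a lookup from each word to its immediate hyperonym. This Python sketch assumes, for simplicity, that every word has exactly one hyperonym, whereas real lexical hierarchies are messier. The dictionary `HYPERONYM` is a hypothetical toy built only from this chapter’s examples.

```python
# Hypothetical toy hierarchy built from this chapter's examples: each word
# maps to its single immediate hyperonym (real vocabularies are messier).
HYPERONYM = {
    "bengal tiger": "tiger",
    "tiger": "cat",
    "cat": "mammal",
    "mammal": "animal",
    "rose": "plant",
    "orchid": "plant",
}

def hyperonym_chain(word):
    """Return all hyperonyms of `word`, from most specific to most general."""
    chain = []
    while word in HYPERONYM:
        word = HYPERONYM[word]
        chain.append(word)
    return chain

def is_hyponym(specific, general):
    """True if `specific` lies anywhere below `general` in the hierarchy."""
    return general in hyperonym_chain(specific)

print(hyperonym_chain("bengal tiger"))  # ['tiger', 'cat', 'mammal', 'animal']
print(is_hyponym("rose", "plant"))       # True
print(is_hyponym("cat", "tiger"))        # False: the relation runs one way
```

Walking up the chain makes the asymmetry explicit. “Cat” is a hyperonym of “tiger,” so the reverse check fails.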

Word Classifications

Linguists categorize words in an attempt to understand how they are organized in the brain. The parts of speech children are taught in school are a simplified version of this categorization. A part of speech is a syntactic category, which tells us what role a word plays in a sentence. Words are classified technically both by class and by category; both will be discussed here.

Word class refers to whether a word serves a lexical function or a grammatical function. In other words, it concerns whether a word carries some of the content of the sentence or helps to string the content words together so that we can understand their roles. In the sentence “The bear climbed up the tree to get a piece of fruit,” the words “bear,” “climbed,” “tree,” “get,” “piece” and “fruit” are content words. The words “the,” “up,” “the,” “to,” “a” and “of” are function words.

Another way to look at these words is as open or closed class. Open-class words are nouns, verbs, adjectives and sometimes adverbs. New words can be added to this class as the need arises, and words can fall out of use fairly quickly. Closed-class words are function words (prepositions, personal pronouns, determiners, etc.), and they are relatively fixed in a language. New members are added only very rarely, and any change in this class takes a very long time. This is why French and Italian both use “tu” as the second person singular personal pronoun (in the subjective case): the form has been inherited from Latin, from which both languages descend. It is also why, no matter how hard anyone tries, English will probably never adopt a new singular gender-neutral third person pronoun to replace “he” or “she.” For the same reason, the use of the plural pronoun “they” for this purpose is likely here to stay (sorry, English teachers!).

Syntactic categories are another way of classifying words, and all languages have them. The three most clearly defined categories are nouns, verbs and adjectives. There are also numerous smaller categories, such as prepositions, determiners, articles, adverbs and conjunctions. These are a matter of great debate among linguists and vary more cross-linguistically. Categories are usually taught in terms of their members (e.g. a noun is a person, place or thing; a verb is an action word). However, they are more accurately defined by their role in a sentence. A noun is the category that typically fills the subject position of a sentence or the object position of a verb. In “The dolphin ate the fish,” for example, “dolphin” and “fish” are the subject and object, respectively. A verb is the only category that dictates which other positions must be filled for a sentence to be complete. In “I gave the cake to my mom,” the verb “gave” requires a subject, a direct object and an indirect object. All sentences have a verb, even one-word sentences like “Go!” where there is no noun in subject position. Semantically there is still a subject; it is simply a rule of English that the grammatical subject of a verb in the imperative mood is not expressed. This tree diagram shows a simple sentence composed of a noun phrase and a verb phrase. Notice that even though the sentence doesn’t make any sense, it is still grammatical, because each position is filled by a word of the right syntactic category. The category of each word is labelled, and all of the words are open-class words.

The section on morphology, above, explained how syntactic category can be changed through derivational morphology, but morphology is not always necessary for this. Category can also be changed through a process called conversion, or zero-affixation, where a word (without changing its form at all) can fill a different role in the sentence and thus be part of a new category in addition to the old one (Adams, 1973). An example of this is “They tabled the motion,” where “table” (a noun) is being used as a verb. The verb "sleep" in the diagram above can also be used as a noun in the process of conversion, as in "have a good sleep." Further discussion of changes to words can be found in the section New Words and New Uses.

Word Frequency

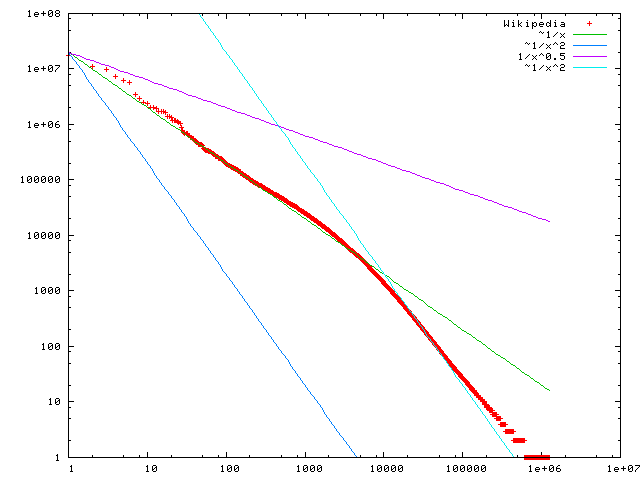

As mentioned above, the average person is more likely to have “cat” in their active vocabulary than “verisimilitude.” This is largely a matter of frequency: in everyday life, there are many more reasons to talk about cats than about verisimilitude. It doesn’t take a sophisticated study to determine that some words are more common than others. Many people have compiled lists of the most common words, both in specific languages and cross-linguistically. These lists don’t always line up perfectly. The most common word in English is “the,” but this would not be the case in languages where the definite article is used less pervasively than in English. All languages have words for food, people, water and some other basic concepts. Usually, the more basic a concept is to human life and society, the more common its word is cross-linguistically.

One difficulty in determining the most frequent words is that speech and writing yield different results: in speech, the pronouns “I” and “you” would likely be far more common than they are in writing. However, it is very difficult to record the words (or even a tiny portion of them) spoken by many people who speak the same language. Those speakers live in many different parts of the world and come from many social groups, classes, ethnicities and age groups. As a result, most of the available data come from analyzing a corpus of writing. This graph shows the frequency of words used on Wikipedia in 2006. You can see that the first five words are used significantly more frequently than the rest. As frequency goes down, the difference in frequency between adjacent words shrinks too. In other words, the difference in frequency between very common words is greater than the difference between less common words.
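The pattern described for the Wikipedia graph is commonly modeled by Zipf’s law: a handful of words dominate, and the gaps between neighboring ranks shrink as frequency drops. Under Zipf’s law, a word’s frequency is roughly inversely proportional to its frequency rank. The chapter does not name Zipf’s law. This Python sketch uses it only as an idealization, with a hypothetical top count of 1,000. It also includes a crude word counter of the kind used to build frequency lists from a written corpus. Real corpora only approximate the 1/rank curve, and the helper names are illustrative.

```python
import re
from collections import Counter

def rank_frequency(text):
    """Tokenize `text` crudely (lowercase letter runs) and return
    (word, count) pairs sorted from most to least frequent."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(tokens).most_common()

def zipf_counts(top_count, n_ranks):
    """Idealized Zipf distribution: the word at rank r occurs top_count / r times."""
    return [top_count / r for r in range(1, n_ranks + 1)]

# Counting a real (here, very small) sample of written text.
sample = "The bear climbed up the tree to get a piece of fruit."
print(rank_frequency(sample)[0])  # ('the', 2)

# Under the idealization, gaps between adjacent ranks shrink as rank increases.
expected = zipf_counts(1000, 6)
gaps = [round(a - b, 1) for a, b in zip(expected, expected[1:])]
print(gaps)  # [500.0, 166.7, 83.3, 50.0, 33.3]
```

The shrinking gaps mirror the graph’s observation. The difference in frequency between the first and second words is far larger than the difference between the fifth and sixth.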

The frequency of a word affects how quickly it is retrieved in the brain. Lively et al. (1994) report findings from Savin (1963) showing that people identified high-frequency words more easily than low-frequency words when the words were presented against a background of white noise. Luce et al. (1984, in Lively et al., 1994) found that people needed less acoustic input to recognize high-frequency words. Luce (1986, in Lively et al., 1994) found that lexical decision times were faster for high-frequency words. Together with the active and passive vocabulary phenomenon, these findings suggest that high-frequency words are accessed more readily and understood faster than low-frequency words.

New Words and New Uses

The beauty of words is that we are all in control of them. Every competent speaker of a language is able, and allowed, to produce new words when needed and to alter the meaning of existing ones. As long as grammatical rules are followed, these new words will be understood even by people who have never heard them before. This is the power of derivational morphology. It also suggests that affixes are stored in the brain separately from bases, since they are constantly used and understood in novel combinations. A morphological process separate from affixation is compounding, discussed in the introduction, in which two words are joined to become one word with a meaning distinct from both of them. “Water bottle,” “flower-pot” and “racetrack” are all examples of compounds.

A nonce formation is a word created for one-time use by a speaker. If I describe a song as “Black Sabbath-esque,” you will know what I mean even if you have never heard the word before. This nonce word is unlikely to catch on in common English, but coinages are sometimes adopted into everyday language. Aitchison (2003) outlines three criteria for adoption: many examples, a specific meaning, and morphological transparency (an easily deduced meaning).

Different from a nonce formation but fulfilling a similar motivation is the concept of "transfers of meaning", described by Geoffrey Nunberg (1997). He gives the example of handing a valet your car keys and saying "This is parked out back." Clearly, it is not the thing you handed him (your keys) which is parked out back, but by referring to them you are making implicit reference to the car they go with (which is parked out back). No new word is being created in this process, but a nonce meaning is assigned to the signifier "this" (standing for the keys).

Words can also enter a language by borrowing from another language: this is why English has both “pig” (from Anglo-Saxon) and “pork” (from French). English once took in many words this way. Today the flow more often runs in the other direction, with English words exported to other languages in the fields of technology and culture (such as words for computers and country music). These usually enter other languages as phonetic adaptations of the English words rather than as literal translations, often even keeping the English spelling.

Aitchison (2003) discusses layering, a process in which a word comes to have more than one meaning (polysemy). Over time, this process may result in separate lexemes, or a new meaning may take over from the old one. For example, Old English “sælig” meant “happy” or “blessed.” It turned into Middle English “sely,” meaning “pathetically innocent,” and finally became Modern English “silly,” meaning something similar to “stupid.” Yule (2006) discusses semantic broadening and narrowing, in which a word’s meaning becomes less or more specific over time. He gives the example of “wife,” which in Old English and early Middle English meant “woman” but through narrowing has come to its modern meaning. Saussure distinguishes between the “immutability” of language and its “mutability” (in Silverman and Torode, 1980). Immutability means that no individual has the power to profoundly change a language. Mutability is the principle that language changes over time, not through individual efforts but because of the nature of language as a system. Much linguistic change is driven by need. I can’t decide to call a “cow” a “fireplace” just because I want to, since no one would join me and it would be pointless. But when a new concept or object is created, it needs a word, and this is what gives us words like “facebook,” “text” (as a verb) and “uncool.”

Another fascinating area of language change (and one that is affected by individual efforts) is political correctness. To most people, the acceptable terms seem to change every few years. This is remarkably fast, given how pervasive most PC or non-PC terms are. In my grandmother’s youth, the appropriate term for a developmentally delayed person was “idiot” (with minimal negative connotation). When my mother was young, this had become an insult (retaining, to a lesser degree, the association with developmental disabilities), and the PC term was “retarded.” Nowadays, anyone under about 30 years old will tell you that “retard” is insulting. Since “retard,” we have gone through terms such as “mentally handicapped,” “mentally challenged,” “differently abled,” “learning disabled,” “special” and countless others. All of this happened in a very short period of time: the postage stamp was issued in 1974 and the Special Olympics picture was taken in 2009. This is staggeringly fast for language change. The sad truth is that each time a new term is adopted as the “neutral” one, it eventually comes to be used as an insult. The same is true of terms for race, sexual orientation and so on, which suggests that blaming language for society’s attitudes is futile (Crystal, 2006).

Conclusion/Summary

This chapter has provided an overview of some aspects of words. While “word” is not a technical term, the label “lexeme” allows us to be more specific. A short introduction to morphology outlined the differences between bound and free morphemes and between inflectional and derivational morphemes. It also showed how new lexemes are formed by affixation. Words were defined as arbitrary signs, with no inherent relationship between the signifier and the signified. Denotation and connotation were discussed, as well as how fuzzy the boundaries between word meanings can be. Register was explained as differentiating words by level of formality. The prototype was outlined as the best example of a given word’s concept, stored in the brain. The different relationships between words were identified: polysemy (multiple related meanings), homonymy (multiple unrelated meanings), synonymy (two words sharing a meaning), antonymy (two words with opposite meanings) and hyponymy (two words differing in level of specificity). Words were categorized as open class (new words can be added) or closed class (new words are added only very rarely), and also according to syntactic categories. The frequency of words both cross-linguistically and in English was discussed, as well as the effect of frequency on various lexical tasks. The ability of language to change both gradually and quickly was examined through the adoption of nonce formations and the process of layering. Political correctness served as an example of both rapid language change and the impact of social attitudes on language.

In conclusion, words are not static but dynamic: their meanings can change, divide, converge and disappear. Words are controlled by all competent speakers of a language, and are a remarkably fluid medium of communication.

References

Adams, V. (1973). An Introduction to Modern English Word-Formation. London: Longman.

Aitchison, J. (2003). Words in the Mind: An Introduction to the Mental Lexicon. Oxford: Blackwell Publishing.

Brown, R. (1958). Words and Things: An Introduction to Language. New York: Free Press.

Copestake, A. & Briscoe, T. (1997). Semi-Productive Polysemy and Sense Extension. In Pustejovsky, J. & Boguraev, B. (Eds.), Lexical Semantics: The Problem of Polysemy. Oxford University Press. Retrieved 15 March 2011, from <http://lib.myilibrary.com.ezproxy.library.dal.ca?ID=80716>

Crystal, D. (2006). Words, Words, Words. New York: Oxford University Press.

Hampton, J. (1991). The Combination of Prototype Concepts. In Schwanenflugel, P. J. (Ed.), The Psychology of Word Meanings (pp. 91-116). Hillsdale, N.J.: Lawrence Erlbaum Associates.

Hudson, R. (2007). Language Networks: The New Word Grammar. Oxford University Press. Retrieved 15 March 2011, from <http://lib.myilibrary.com.ezproxy.library.dal.ca?ID=75671>

Hughes, G. (1988). Words in Time: A Social History of the English Vocabulary. Oxford: Basil Blackwell Ltd.

Lively, S. E., Pisoni, D. B., & Goldinger, S. D. (1994). Spoken Word Recognition. In M. A. Gernsbacher (Ed.), Handbook of Psycholinguistics (pp. 265-301). New York: Academic.

Nunberg, G. (1997). Transfers of Meaning. In Pustejovsky, J. & Boguraev, B. (Eds.), Lexical Semantics: The Problem of Polysemy. Oxford University Press. Retrieved 15 March 2011, from <http://lib.myilibrary.com.ezproxy.library.dal.ca?ID=80716>

Pinker, S. (1999). Words and Rules: The Ingredients of Language. New York: Basic Books.

Saussure, F. de. (1974). Course in General Linguistics. Fontana/Collins. (Originally published 1916.)

Schwanenflugel, P. J. (1991). Why are Abstract Concepts Hard to Understand? In Schwanenflugel, P. J. (Ed.), The Psychology of Word Meanings (pp. 223-250). Hillsdale, N.J.: Lawrence Erlbaum Associates.

Silverman, D. & Torode, B. (1980). The Material Word: Some Theories of Language and Its Limits. London: Routledge & Kegan Paul Ltd.

Simpson, G. B. (1994). Context and the Processing of Ambiguous Words. In M. A. Gernsbacher (Ed.), Handbook of Psycholinguistics (pp. 359-374). New York: Academic.

Yule, G. (2006). New York: Cambridge University Press.

Learning Exercises

The following are questions to enhance your learning and understanding of the above material. In answering, consider evidence from this chapter and from your own experience with words (or other research you have done in this area).

Word Relationships

Identify and explain the relationship between these pairs or groups of words (for example, hyponymy or synonymy). There may be more than one answer. Answers are given at the bottom of the page.

a) Seat-Pew

b) Raise-Lower

c) Cat-Feline

d) Blue-Aquamarine

e) Chair-Table

f) Dark-Light

g) Distasteful-Gross

h) Foot-Head-Pay

i) Visionary-Idealist

j) Toilet-Powder Room

k) Vista-Play (noun)-Scene

l) Strong-Weak

m) Animal-Calf

n) Inebriated-Drunk-Wasted

o) Present-Absent

p) Bottle-Container

q) Die-Pass Away

Essay Questions

1. Discuss the differences between Saussure's concept of the sign (signifier/signified) and Aitchison's idea of fuzzy edges (with evidence from Labov). Do they disagree, or do their ideas support each other? Do you agree with one or with both? Write a brief essay, using support from both authors.

2. We have learned that words are fluid. Explain the reasons for and results of this fluidity, using both semantic and grammatical evidence. What kinds of words are not fluid, and why? Write a short essay (several paragraphs). Come up with your own examples.

3. Most of the examples above come from English. Discuss the advantages and drawbacks of this in several paragraphs. Is it more effective to look at words from the viewpoint of a single language, or to always examine the concept of "word" in a cross-linguistic context? If you are familiar with another language, discuss the processes and ideas identified in this chapter in relation to that language. Can you discover any radical differences, or do words exhibit similar behaviours and patterns regardless of language? If you do not know another language, verb paradigms, pronoun charts and many other simple tools can easily be found on the internet. Find a source like this for another language and compare it to English.

Labelling

The following are concepts that need labels.

a) The process of change in measurements of groundwater radiation levels over time

b) Moving back home with your parents after having moved out to go to university

c) The condition of seeing real life as a two-dimensional world of violence as a result of playing too many video games

d) The fatigue felt after reading a lengthy textbook on psycholinguistics

e) Anxiety experienced over not knowing the answer to an exam question

f) A person who lives a very modest life, while having a fortune in the bank

Make up a new word to label these things. Your term can be made up of more than one typographic word (i.e. it can have spaces in it, as in "flower pot") but should be as succinct as possible. It must not be a phrase, but instead must function syntactically as one unit. (This is crucial if something is to be considered a word.) Then explain in a paragraph or two why your term is a good label for the concept, and why it could be adopted as the general term in English (although of course this probably won't happen).

BONUS: Identify all the morphemes used in your words. Are they free or bound? What categories do they create? Are they derivational or inflectional?

Answers to Word Relationships

a) Pew is a hyponym of Seat (a pew is a kind of seat).

b) Raise and Lower are reversive antonyms.

c) Cat and Feline are synonyms, but Feline belongs to a higher register and is often restricted to scientific discourse.

d) Blue is a hypernym of Aquamarine (aquamarine is a kind of blue).

e) Chair and Table share the associative meaning of "furniture".

f) Dark and Light are gradable antonyms.

g) Distasteful is of a higher register than Gross.

h) Foot is polysemous. It can share the associative meaning of "body part" (with Head) or be a synonym of Pay (as in "foot the bill").

i) Visionary has a positive connotation, whereas Idealist often has a negative connotation.

j) Powder Room is a euphemism for Toilet.

k) Scene is polysemous. It can share an associative meaning of "theatre terms" with Play, or can be synonymous with Vista.

l) Strong and Weak are gradable antonyms.

m) Animal is a hypernym of Calf (a calf is a kind of animal).

n) These words belong to different registers. Inebriated is formal, Drunk is fairly neutral, and Wasted is casual or colloquial.

o) Present and Absent are non-gradable antonyms.

p) Bottle is a hyponym of Container (a bottle is a kind of container).

q) Pass Away is a euphemism for Die.